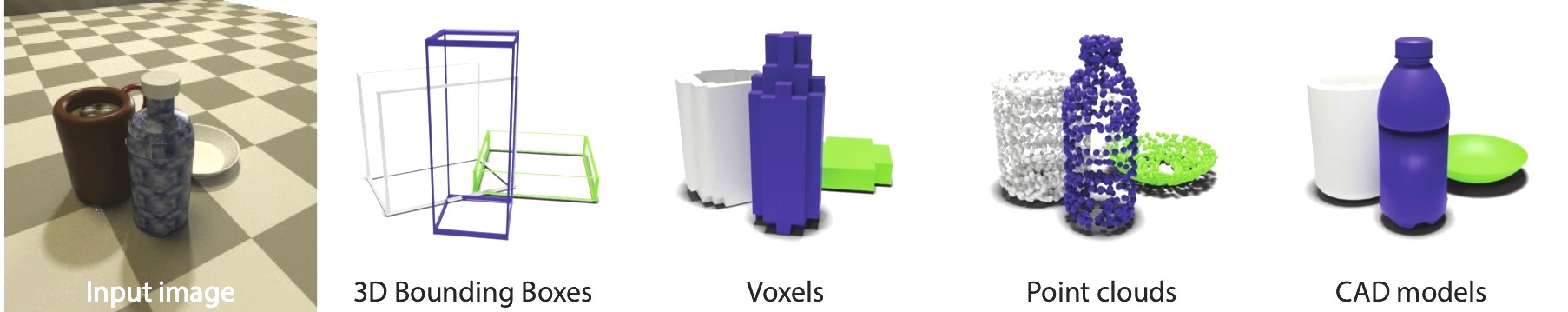

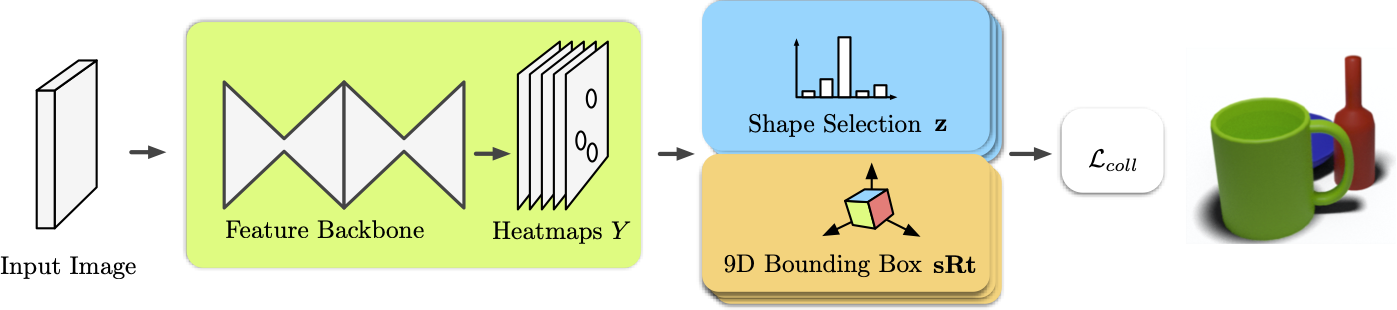

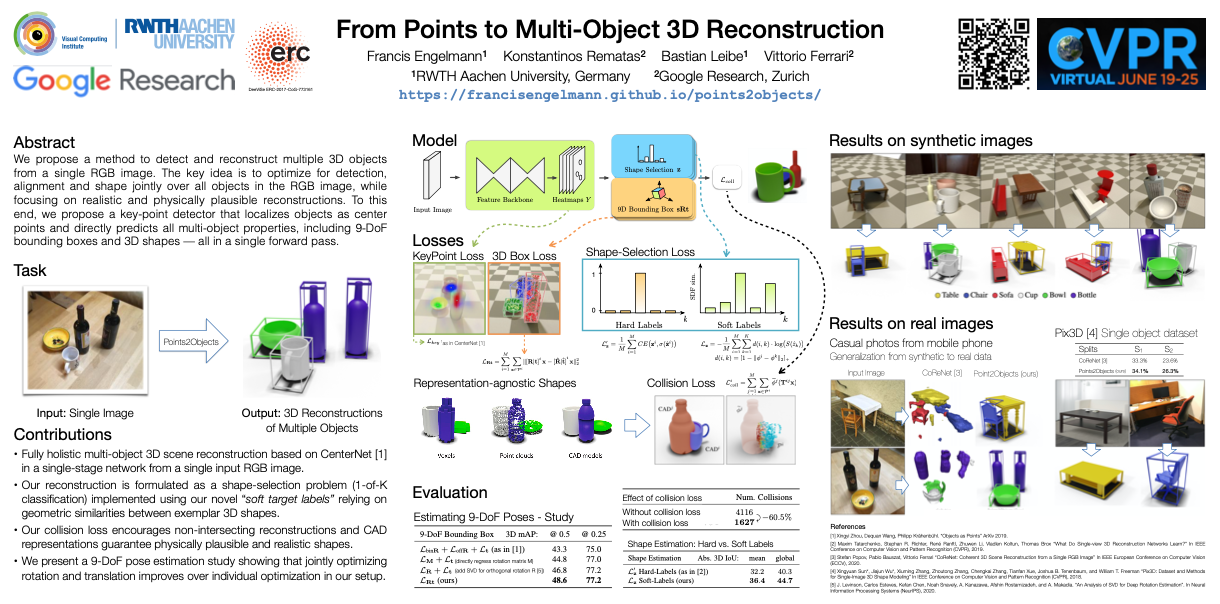

We propose a method to detect and reconstruct multiple 3D objects from a single RGB image. The key idea is to jointly optimize detection, alignment and shape over all objects in the image, while focusing on realistic and physically plausible reconstructions. To this end, we propose a keypoint detector that localizes objects as center points and directly predicts all object properties, including 9-DoF bounding boxes and 3D shapes, in a single forward pass.
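Center-point detectors are commonly decoded by keeping the heatmap cells that are local maxima and then reading the other predicted properties at those same cells. The following is a minimal pure-Python sketch of that idea, assuming a 3x3 neighbourhood, a score threshold and a top-k cutoff (all hypothetical choices); `extract_centers` and `gather_properties` are illustrative names, not functions from the released TensorFlow 2 code.

```python
from typing import Dict, List, Sequence, Tuple

Grid = List[List[float]]


def extract_centers(heatmap: Grid, threshold: float = 0.3,
                    k: int = 10) -> List[Tuple[int, int, float]]:
    """Return up to k (row, col, score) peaks, strongest first.

    Hypothetical decoding: a cell counts as an object center if its score is
    above `threshold` and is the maximum of its 3x3 neighbourhood (the same
    effect as the 3x3 max-pool suppression used by many center-point detectors).
    """
    h, w = len(heatmap), len(heatmap[0])
    peaks = []
    for r in range(h):
        for c in range(w):
            score = heatmap[r][c]
            if score < threshold:
                continue
            # Scores in the 3x3 window around (r, c), clipped at the borders.
            window = [heatmap[rr][cc]
                      for rr in range(max(0, r - 1), min(h, r + 2))
                      for cc in range(max(0, c - 1), min(w, c + 2))]
            if score >= max(window):
                peaks.append((r, c, score))
    peaks.sort(key=lambda p: p[2], reverse=True)
    return peaks[:k]


def gather_properties(peaks: List[Tuple[int, int, float]],
                      property_maps: Dict[str, List[List[Sequence[float]]]]
                      ) -> List[Dict[str, object]]:
    """Read every per-pixel property map (e.g. box or shape codes) at each peak."""
    objects = []
    for r, c, score in peaks:
        obj: Dict[str, object] = {"center_px": (r, c), "score": score}
        for name, grid in property_maps.items():
            obj[name] = list(grid[r][c])
        objects.append(obj)
    return objects
```

Because every property is read at the same peak location, all objects are decoded from one set of output maps, which is what lets a single forward pass produce every object's properties at once.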

Model

Overview of the proposed approach: given a single RGB image, our model detects object centers as keypoints in a heatmap. The network directly predicts shape exemplars and 9-DoF bounding boxes jointly for all objects in the scene, and a collision loss favors non-intersecting reconstructions. Our method predicts lightweight, realistic and physically plausible reconstructions in a single pass.
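Two ingredients of the overview are easy to make concrete: a 9-DoF box (3 translation, 3 rotation and 3 scale parameters) and a penalty that grows when objects intersect. Below is a minimal sketch, assuming an Euler-angle rotation convention and a simple sample-counting overlap measure between boxes; `Box9DoF`, `collision_penalty` and the grid resolution `n` are hypothetical. This is a simplified proxy, not the paper's collision loss, which acts on the reconstructions and would need a differentiable form for training.

```python
import itertools
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Box9DoF:
    """Hypothetical 9-DoF box: 3 translation, 3 rotation, 3 scale parameters."""
    center: Vec3  # (x, y, z) in world coordinates
    angles: Vec3  # Euler angles (rx, ry, rz) in radians
    size: Vec3    # full extents along the box's local x, y, z axes

    def rotation(self) -> List[List[float]]:
        """R = Rz @ Ry @ Rx; one of several possible Euler conventions."""
        rx, ry, rz = self.angles
        cx, sx = math.cos(rx), math.sin(rx)
        cy, sy = math.cos(ry), math.sin(ry)
        cz, sz = math.cos(rz), math.sin(rz)
        return [
            [cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx],
            [sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx],
            [-sy, cy * sx, cy * cx],
        ]

    def contains(self, p: Vec3, eps: float = 1e-9) -> bool:
        """Map p into the box frame, R^T (p - t), and compare with half extents."""
        R = self.rotation()
        d = [p[j] - self.center[j] for j in range(3)]
        local = [sum(R[j][i] * d[j] for j in range(3)) for i in range(3)]
        return all(abs(local[i]) <= self.size[i] / 2 + eps for i in range(3))

    def interior_samples(self, n: int = 5) -> List[Vec3]:
        """n^3 cell-centred grid points inside the box, in world coordinates."""
        R = self.rotation()
        ticks = [(k + 0.5) / n - 0.5 for k in range(n)]
        points = []
        for u in itertools.product(ticks, repeat=3):
            local = [u[i] * self.size[i] for i in range(3)]
            points.append(tuple(
                self.center[i] + sum(R[i][j] * local[j] for j in range(3))
                for i in range(3)))
        return points


def collision_penalty(a: Box9DoF, b: Box9DoF, n: int = 5) -> float:
    """Simplified proxy, not the paper's loss: fraction of each box's samples
    lying inside the other box, averaged (0.0 means no detected overlap)."""
    a_in_b = sum(b.contains(p) for p in a.interior_samples(n)) / n ** 3
    b_in_a = sum(a.contains(p) for p in b.interior_samples(n)) / n ** 3
    return 0.5 * (a_in_b + b_in_a)
```

For example, two 2x2x2 boxes whose centers are 1 unit apart along x overlap by half their width, and the sampled penalty comes out at 0.6 with `n=5`. Counting samples is a hard inside/outside test and gives no gradients; replacing it with a smooth function of the local coordinates is one way to turn such a proxy into a trainable loss.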

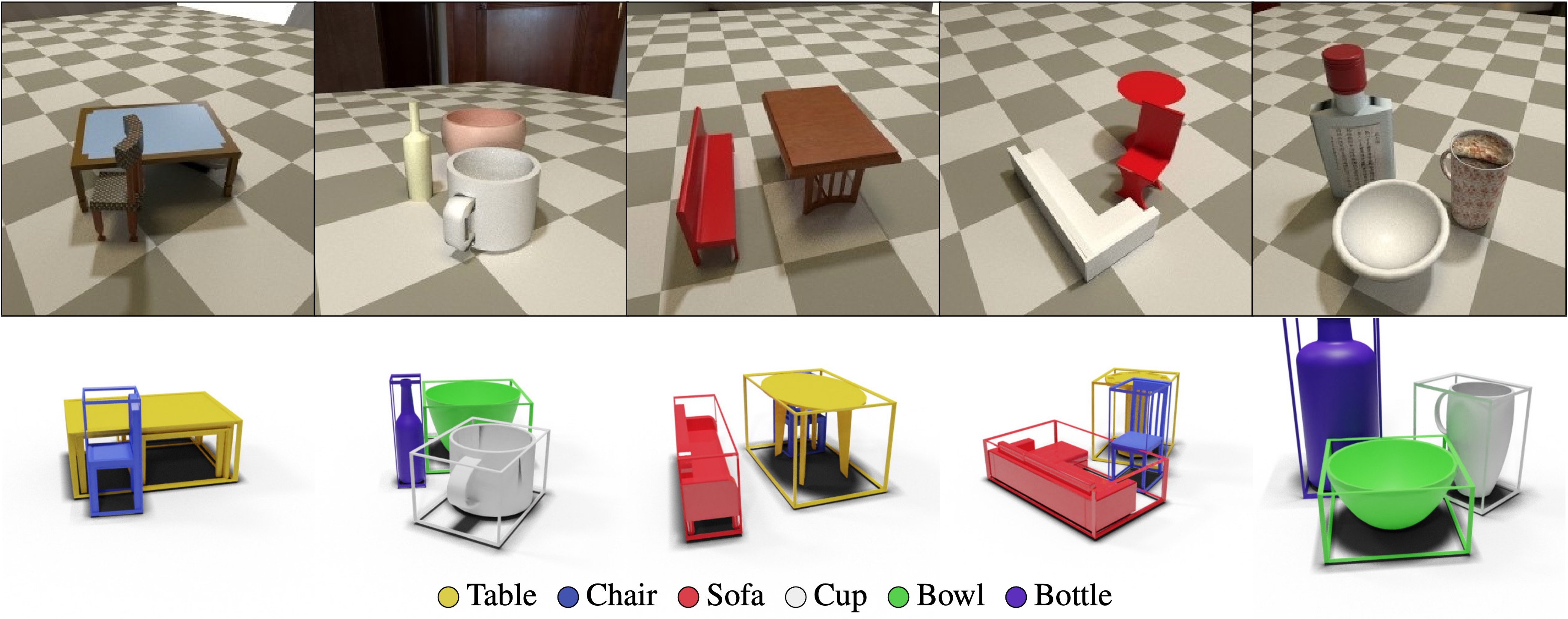

Results on synthetic images.

Results on real images.

Video

Publication

Poster

Code and Datasets

TensorFlow 2 code [link]

Multi-object synthetic CoReNet dataset [link]

The code on GitHub includes the pre-processing steps (work in progress).

BibTeX

@inproceedings{engelmann2020points,

author = {Engelmann, Francis and Rematas, Konstantinos and Leibe, Bastian and Ferrari, Vittorio},

title = {{From Points to Multi-Object 3D Reconstruction}},

booktitle = {{IEEE Conference on Computer Vision and Pattern Recognition (CVPR)}},

year = {2021}

}